Automated Labelling of Radiology Reports

Automated labelling of radiology reports using natural language processing: Comparison of traditional and newer methods

Principal Investigator: Dr Cheng Tim-Ee Lionel, Clinical Director (Artificial Intelligence), Future Health System Department, Singapore General Hospital

Automated labelling of radiology reports using natural language processing makes it possible to generate ground-truth labels for the large datasets of radiological studies that are required to train computer vision models. This paper explains the necessary data preprocessing steps, reviews the main methods for automated labelling and compares their performance.

There are four main methods of automated labelling, namely: (1) rules-based text-matching algorithms, (2) conventional machine learning models, (3) neural network models and (4) Bidirectional Encoder Representations from Transformers (BERT) models.

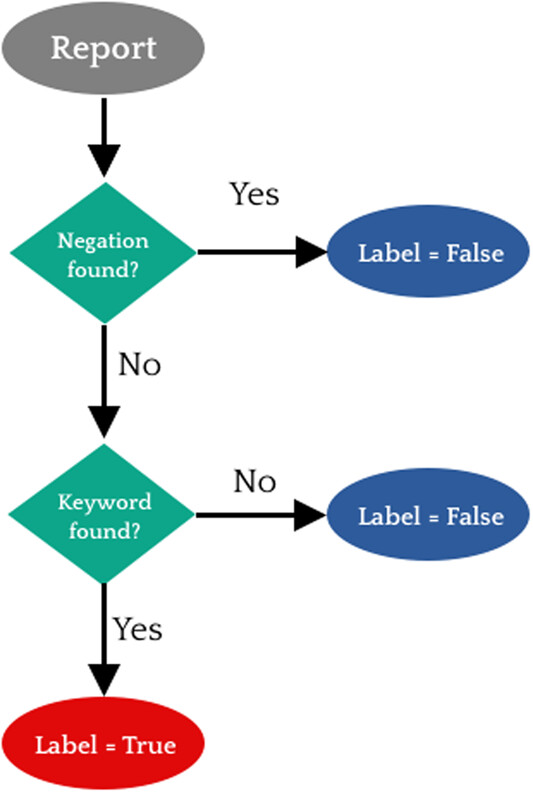

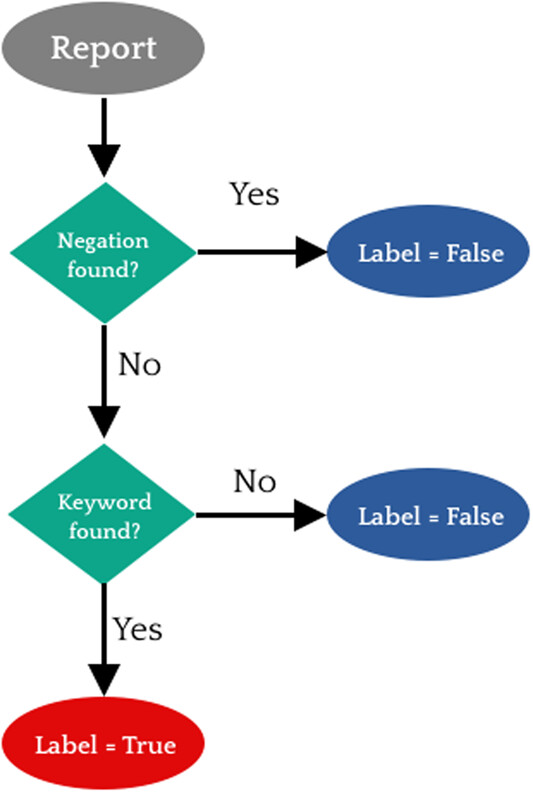

Rules-based labellers perform a brute-force search of each report against manually curated keywords and are able to achieve high F1 scores. However, they require proper handling of negation (for example, "no pneumothorax"), so that a finding mentioned only to be ruled out is not labelled as present.
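The rules-based approach described above can be sketched in a few lines. This is a minimal illustration and not the labeller evaluated in the paper: the keyword lists, the negation cues and the function name `label_report` are all hypothetical, and the negation rule (a cue anywhere earlier in the same sentence negates the finding) is a deliberate simplification.

```python
import re

# Hypothetical keyword lists; a real labeller would use clinician-curated terms.
KEYWORDS = {
    "pneumothorax": ["pneumothorax"],
    "pleural_effusion": ["pleural effusion", "effusion"],
    "consolidation": ["consolidation"],
}

# Hypothetical negation cues (assumption: a cue negates findings later in the sentence).
NEGATION_CUES = ["no", "not", "without", "negative for", "free of"]

_NEGATION_RE = re.compile(
    r"\b(" + "|".join(re.escape(cue) for cue in NEGATION_CUES) + r")\b"
)


def label_report(text):
    """Return the set of labels whose keywords appear un-negated in the report."""
    labels = set()
    # Split into sentences so that a negation cue only affects its own sentence.
    for sentence in re.split(r"[.;\n]+", text.lower()):
        for label, terms in KEYWORDS.items():
            for term in terms:
                # Only the first mention of each term in a sentence is checked.
                match = re.search(r"\b" + re.escape(term) + r"\b", sentence)
                if match is None:
                    continue
                # Treat the mention as negated if a cue precedes it in the sentence.
                preceding = sentence[: match.start()]
                if not _NEGATION_RE.search(preceding):
                    labels.add(label)
    return labels


print(label_report("No pneumothorax. Small left pleural effusion."))
```

Without the negation check, the first sentence of the example report would be wrongly labelled as a pneumothorax, which is the failure mode that negation handling is meant to prevent.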

Machine learning models require preprocessing that involves tokenization of the report text and vectorization of the resulting tokens into numerical vectors. Because a single radiology report can mention several findings, multilabel classification approaches are required, and conventional models can achieve good performance if they have large enough training sets.
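As a rough sketch of the preprocessing described above, the following code tokenizes reports, builds a bag-of-words count vector for each one, and encodes the labels as one binary column per finding so that any conventional classifier can be trained per column. The function names, the toy reports and the choice of a plain bag-of-words representation are assumptions made for illustration; the paper may use a different vectorization scheme.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(reports):
    """Map each distinct token to a column index, in sorted order."""
    tokens = {tok for report in reports for tok in tokenize(report)}
    return {tok: i for i, tok in enumerate(sorted(tokens))}


def vectorize(text, vocabulary):
    """Turn one report into a bag-of-words count vector."""
    counts = Counter(tokenize(text))
    vector = [0] * len(vocabulary)
    for tok, n in counts.items():
        if tok in vocabulary:  # tokens unseen when building the vocabulary are ignored
            vector[vocabulary[tok]] = n
    return vector


def encode_labels(label_sets, classes):
    """Multi-hot encode label sets: one binary column per finding."""
    return [[1 if c in labels else 0 for c in classes] for labels in label_sets]


# Made-up toy reports and labels, for illustration only.
reports = ["Left pleural effusion.", "Pneumothorax and pleural effusion."]
label_sets = [{"effusion"}, {"pneumothorax", "effusion"}]
classes = ["effusion", "pneumothorax"]

vocab = build_vocabulary(reports)
X = [vectorize(r, vocab) for r in reports]  # feature matrix, one row per report
Y = encode_labels(label_sets, classes)      # target matrix, one column per label
```

Each column of `Y` can then be treated as its own binary classification problem, which is one common way of framing multilabel classification.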

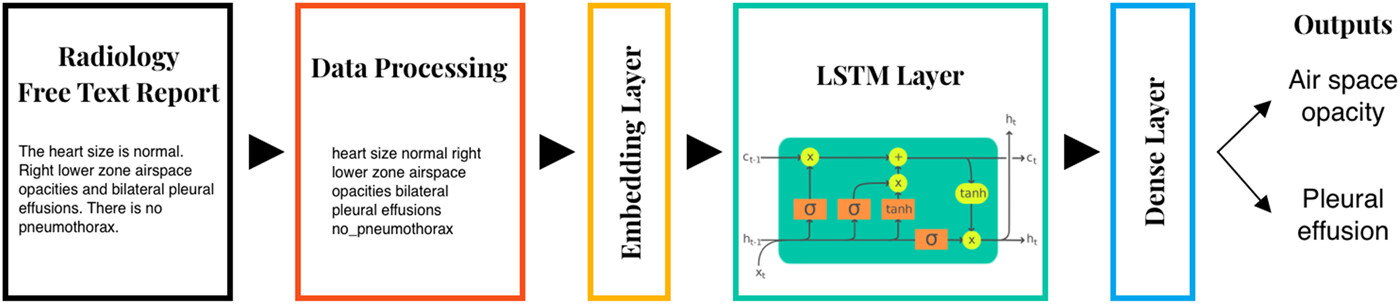

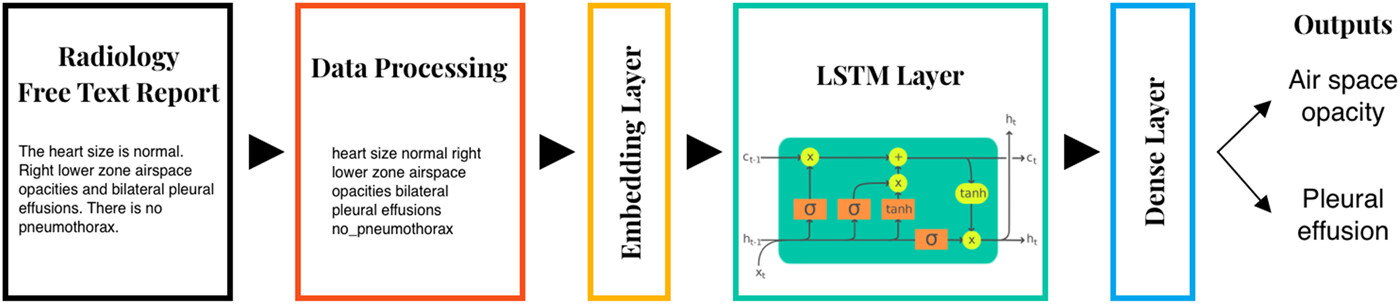

Deep learning models make use of neural networks, often a long short-term memory (LSTM) network, and are similarly able to achieve good performance if trained on a large data set.
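To make the long short-term memory network mentioned above more concrete, here is a minimal sketch of a single step of a standard LSTM cell in plain Python. It assumes the usual formulation with input, forget and output gates plus a candidate state; the `params` layout and function names are hypothetical, and a practical model would use a deep learning framework with learned weights rather than hand-written loops.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b for list-based matrices and vectors."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]


def lstm_step(x, h, c, params):
    """Advance the cell by one token vector x; returns the new (h, c)."""
    # Each entry of params is a hypothetical (W, U, b) triple for one gate.
    i = [sigmoid(v) for v in affine(*params["input"], x, h)]        # input gate
    f = [sigmoid(v) for v in affine(*params["forget"], x, h)]       # forget gate
    o = [sigmoid(v) for v in affine(*params["output"], x, h)]       # output gate
    g = [math.tanh(v) for v in affine(*params["candidate"], x, h)]  # candidate state
    # Keep part of the old cell state and add part of the candidate.
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def encode_sequence(token_vectors, hidden_size, params):
    """Run the cell over a report's token vectors and return the final hidden state."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in token_vectors:
        h, c = lstm_step(x, h, c, params)
    return h
```

In a labelling model, the final hidden state would typically be passed to an output layer with one sigmoid unit per finding, so that several labels can be predicted for the same report.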

BERT is a transformer-based model that utilizes attention. Pretrained BERT models require only fine-tuning, which can be done with small data sets. In particular, domain-specific BERT models can achieve superior performance compared with the other methods for automated labelling.
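The attention mechanism referred to above is, in transformer models, scaled dot-product attention: each query is compared with every key, the scaled scores are normalized with a softmax, and the result weights the values. The sketch below shows a single attention head in plain Python under simplifying assumptions; BERT itself uses multiple heads with learned projection matrices, which are omitted here, and the function names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

Because every token attends to every other token in the report, a word such as "no" can directly influence the representation of a finding that appears several words later.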
